The AI-generated images trend and what it could mean for NLG [Part 2]

by Sylvia Nguyen, NLP Engineer

If you missed the first part of this article, you can find it here. The previous part explored the topic of Artificial Intelligence as well as AI Art. It also explained what AI-generated images are and what trends and news are going viral on social media at the moment.

This part of the article examines the highly controversial subject of AI-generated images from two points of view, that of an Ella Lab family member and that of a hobby artist, and draws parallels between the findings and NLG.

From the POV of an Ella Lab family member

As a member of the Ella Lab family, I was very interested and invested when I first stumbled upon AI-generated images on several social media platforms. I had played around with some generative tools before, but the results from the currently popular tools were far beyond anything those earlier tools could do. They easily produced high-quality, stunningly beautiful images. Just seeing those pictures, long before I researched and read more about the technologies behind them, filled me with excitement and curiosity about the possibilities that AI technology in general still holds for the future.

Source: Midjourney Community Showcase

It also made me wonder how AI-generated images could be properly integrated into today’s society. Is their current prominence on social media already as far as they will go? Can, or will, they change the process of creating art, and what effect would that have on the people working in the field? Could they show artists new ways to express themselves or help them overcome art blocks? Could they help envision first concepts or become a new source of inspiration?

As great as the results are, and no matter how far AI technologies have already come, there is still a lot of room for improvement. In the case of AI-generated images, a second glance often reveals flaws. Obvious errors such as too many fingers on a character’s hand or unidentifiable details make it quite easy to tell the work of a generative tool apart from that of a human. Besides such mistakes, which professional artists who have spent a great deal of time honing their skills would most likely never make, there is another aspect generative tools currently cannot emulate: a consistent, recognizable style.

AI-generated images are not quite consistent when the same theme has to be produced more than once, since the model only predicts a satisfactory output for each request instead of reliably reproducing a specific style. This is well demonstrated by the previously mentioned children’s book by Ammaar Reshi. The main character of his story had a few specific features that would make her easy for children to recognize, but as Reshi’s Midjourney generation history shows, her visual representation changed slightly over time. Even though she can still be identified as an established character, she looks a little different nonetheless. And consistency is particularly important in visual storytelling.

That being said, the output of AI image generators is only as good as the input data, which plays a big role in the debate about the ethics of artificially generated images. The technology as it exists today cannot recreate anything beyond the patterns in its training data, and it can imitate creativity and imagination only to a certain extent. At the same time, the infamous machine learning phrase “garbage in, garbage out” applies: creators of AI technologies have to find high-quality data for their tools to perform well and produce output of similar quality. Besides lacking human-like creativity, which goes beyond gathering experience in the form of training data, analyzing its patterns and combining them into something new, text-to-image models are also limited by the prompt they are given. The model can only work with the information it receives: it can neither make decisions on its own nor draw conclusions and make connections between details.

This is precisely why the prompt is so important for producing the best possible results. The process of improving a prompt, i.e. replacing parameters and changing variables, is called prompt engineering. As an NLP engineer, I find this aspect particularly interesting. Which prompt produces which results? How should a prompt be structured or phrased? The better the human user understands how to “communicate” with the model, the better the results should be. After all, this knowledge and experience, along with the resulting insights into what works, what doesn’t and where the limitations lie, may eventually prove useful in the field of NLG as well.
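The idea of systematically varying a prompt’s parameters can be sketched in a few lines of Python. This is a minimal illustration, not any particular tool’s workflow: the helper `prompt_variants` and the template fields (`subject`, `style`, `lighting`) are hypothetical, and sending the prompts to a text-to-image model is left out.

```python
from itertools import product
from string import Template


def prompt_variants(template, **options):
    """Yield one prompt for every combination of the given option lists.

    `template` is a string.Template with $placeholders; each keyword argument
    maps a placeholder name to the values to try for it (hypothetical helper).
    """
    keys = list(options)
    for values in product(*(options[key] for key in keys)):
        yield template.substitute(dict(zip(keys, values)))


# Hypothetical template: the structure stays fixed, only the variables change.
template = Template("$subject, $style, $lighting")

for prompt in prompt_variants(
    template,
    subject=["a lighthouse on a cliff"],
    style=["watercolor", "oil painting"],
    lighting=["at dusk", "in morning fog"],
):
    print(prompt)
```

Changing one variable at a time while keeping the others fixed makes it easier to see which part of the prompt is responsible for a change in the generated image.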

Speaking of useful: another point that makes this topic particularly exciting is the technology’s future potential. It is conceivable that AI tools will support those who want to use them in their workflow. Artists could turn to them for assistance during creative blocks or for inspiration before starting a new piece. AI image generation tools could become an extension of artistic creation, opening up new perspectives and possibilities beyond imagination. Just like the machines developed during industrialization, AI tools could optimize and simplify processes.

Turn a sketch & prompt into several variations of an AI-generated image. Source: ControlNet

From the POV of an art lover and hobby artist

At the beginning of this two-part article, I noted that I would refer to images generated by AI tools as AI-generated images instead of AI Art. So why exactly do I think AI-generated images and art should be considered separately?

First of all, I would like to shed some light on the question “What is art?”. As someone who is very interested in art, I think art is like humankind: multi-faceted and very hard to capture in only a few words. Art takes many forms, such as music, painting, dance, theater and film. And it is entirely up to one’s own subjective interpretation.

For some, art might be a way to express themselves and their feelings about the world around them or about society itself. For others, it is an escape from reality, a way to visualize ideas or simply to capture everyday experiences, memories and feelings. It means letting creative energy run free and living out one’s passion. Sometimes art includes what a person can see, what they cannot see, or what they refuse to see. Sometimes it’s loud and colorful, sometimes silent, yet deep. But no matter what art is for the individual, it is deeply linked to one’s own creative mind and imagination. And since an AI doesn’t possess such a free, creative mind that imagines ideas and creates according to them, many artists refuse to refer to AI Art as art. Put bluntly, AI doesn’t possess any real intelligence to begin with, let alone creativity, since it can only predict an acceptable output based on the huge data pool it was trained on. If you wanted to, you could also say that AI lacks the soul, the heart, sweat and tears that artists pour into their works. So the answer to the question heavily depends on one’s perspective: do we measure the artistic value of a work by its creator, or rather by the impact it has on the viewer, as art researcher Bernd Flessner put it?

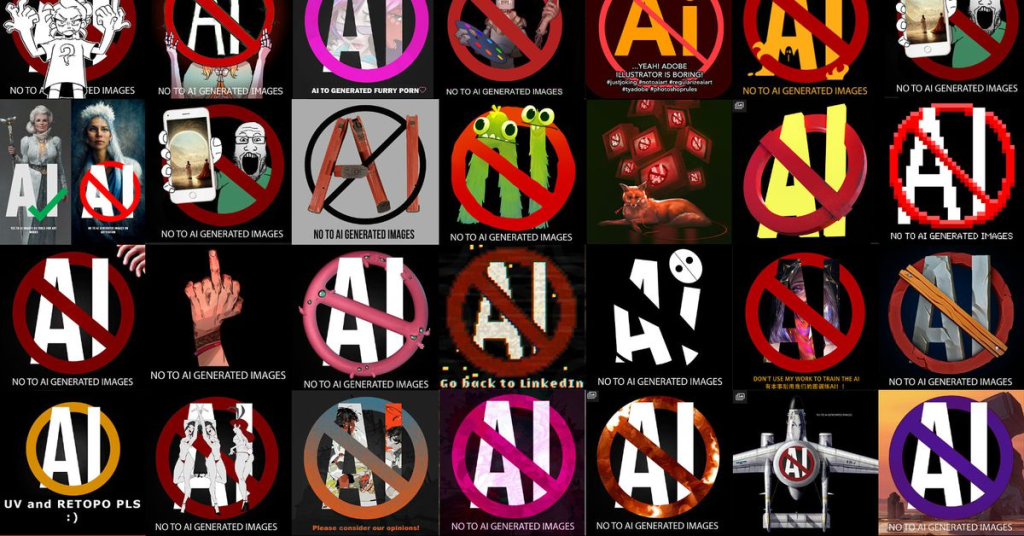

Artists protest AI-generated images on ArtStation. Source: @joysilvart

Calls for a ban on AI-generated images are getting louder and louder as a result. Artists on the platform ArtStation, for example, joined together in protest to express their displeasure with artificially generated images shown on the platform’s Explore page. They saw the works produced by AI image generators as disrespectful to the time and hard work artists invest. However, a general ban on AI-generated images on platforms like ArtStation could be hard to enforce fairly: according to ArtNet, for example, an artist was banned from a Reddit forum because his posted work looked like an AI-generated image. This case shows how advanced the technology truly is.

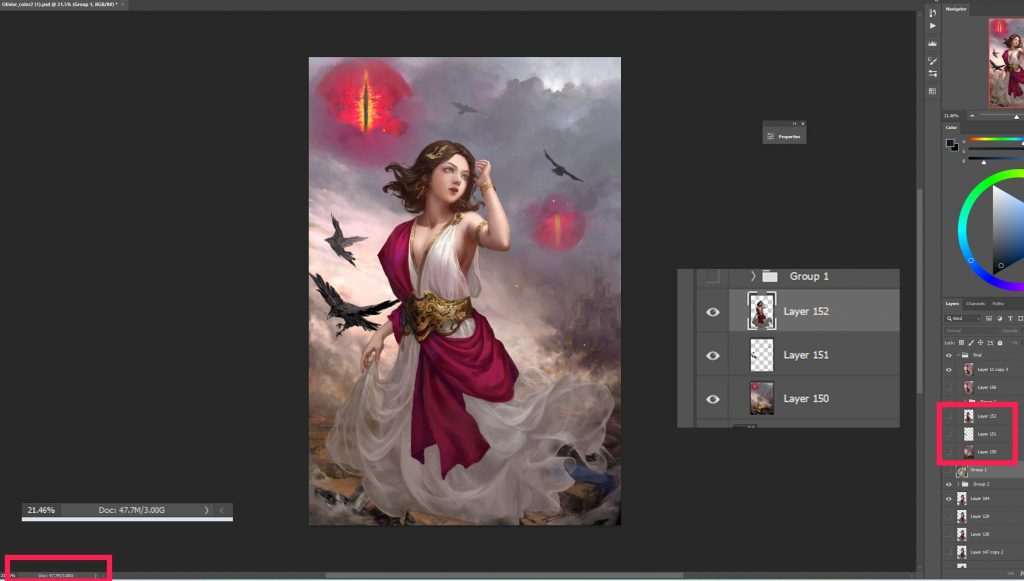

A picture that was banned from Reddit for looking as if it had been generated by an AI tool. Source: @benmoran_artist

I, personally, can spend hours looking at all kinds of images on the internet – be it works by human artists or images generated by AI tools. But as much as the technology and its outcomes fascinate me, I am still a fan of buying art books or prints from my favorite artist. If anything, I simply hope that art created by humans and AI-generated images can coexist under appropriate conditions and regulations that protect the rights of creative minds.

The bigger issue, however, is not the technology behind AI-generated images itself but rather the data it works with. As mentioned in the previous part of this blog post, the models behind the generated images use large datasets of text-image pairs as training data.

There are different ways to collect data. One is to create a dataset from scratch by manually collecting images and annotating them with descriptions to obtain text-image pairs. This requires a lot of time and care, but is rewarded with a specific, high-quality dataset. Another is to use existing datasets such as COCO (Common Objects in Context), which are used for tasks like image generation. A third option is the practice of so-called ‘crawling’: a crawler, a computer program, systematically and repeatedly browses the internet, extracts information such as the structure and content of webpages, and saves it in a database. The Common Crawl project, for example, makes the information collected in this way available to scientists and developers. From this huge collection, a dataset of text-image pairs can then be derived to train image generation models.
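To illustrate how crawling can yield text-image pairs, here is a small Python sketch that follows links breadth-first and collects (image URL, alt text) pairs, since the alt text of an HTML image tag often describes the image. It only uses the standard library’s `html.parser` and `urllib.parse`; the `fetch` function is a hypothetical hook (here just a dictionary lookup) so the example runs without network access. It is a simplified illustration, not how Common Crawl itself is implemented.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class PageParser(HTMLParser):
    """Collects links and (image URL, alt text) pairs from a single HTML page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self.pairs = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("href"):
            # Resolve relative links against the current page URL.
            self.links.append(urljoin(self.base_url, attrs["href"]))
        elif tag == "img":
            src = attrs.get("src")
            alt = (attrs.get("alt") or "").strip()
            # Only images with a non-empty alt text form a text-image pair.
            if src and alt:
                self.pairs.append((urljoin(self.base_url, src), alt))


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl starting at start_url.

    `fetch(url)` is a hypothetical hook that returns the page's HTML or None.
    Returns all (image URL, alt text) pairs found on the visited pages.
    """
    seen = {start_url}
    queue = deque([start_url])
    pairs = []
    visited = 0
    while queue and visited < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # unreachable or non-HTML page
        visited += 1
        parser = PageParser(url)
        parser.feed(html)
        parser.close()
        pairs.extend(parser.pairs)
        for link in parser.links:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return pairs


# In-memory stand-in for the web so the example runs offline.
site = {
    "https://example.com/": '<a href="/gallery">Gallery</a>'
                            '<img src="/cat.png" alt="a cat on a sofa">',
    "https://example.com/gallery": '<img src="dog.jpg" alt="a dog in the snow">'
                                   '<img src="logo.svg">',
}

for image_url, description in crawl("https://example.com/", site.get):
    print(image_url, "->", description)
```

A real crawler would also check robots.txt (Python ships `urllib.robotparser` for this), limit its request rate and handle network errors.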

This form of data collection in particular troubles many artists: they fear that their published work could be crawled from the web without their consent and misused to train image generation tools. Their concerns are therefore based not only on the threat to their livelihood, but also on the fear that their work will be stolen and misused for training purposes against their will.

Even before AI-generated images flooded the web, artists had to contend with art theft, especially online: artworks are often re-uploaded by thieves who pass them off as their own, or blatantly plagiarized. On top of this, potential customers can now use AI-driven image generation tools to create images on their own, quickly and easily, all on the backs of the artists from whose pens the original images may have come. Accusations of art theft had been hard to prove, but because Stable Diffusion is open-source, software developer Simon Willison, according to Andy Baio, was able to extract information about the data the model was trained on. Baio and Willison made their findings public, confirming what many artists around the globe had feared. Stable Diffusion was trained on three large datasets, namely LAION-2B-EN, LAION-High-Resolution and LAION-Aesthetics v2 5+. These consist of image descriptions taken from HTML image tags, i.e. text-image pairs that were classified by language and filtered by resolution, by watermarks and by a score for aesthetically pleasing visuals. Images from websites such as Pinterest, Tumblr, RedBubble and DeviantArt were used for training. This, in turn, means that not only professional artists but also hobbyists are affected.
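The kind of filtering applied to such text-image pairs can be sketched as a chain of simple checks. The record fields (`language`, `width`, `height`, `watermark_prob`, `aesthetic_score`) and all thresholds below are assumptions for illustration only. In practice, language, watermark and aesthetic values come from separate classifiers and were applied when building different subsets, so a single combined filter like this one is a simplification.

```python
from dataclasses import dataclass


@dataclass
class ImageTextPair:
    url: str
    caption: str
    language: str           # detected caption language, e.g. "en" (assumed field)
    width: int              # image width in pixels
    height: int             # image height in pixels
    watermark_prob: float   # watermark classifier output in [0, 1] (assumed field)
    aesthetic_score: float  # predicted aesthetic score, higher is better (assumed field)


def keep(pair, language="en", min_side=1024, max_watermark=0.5, min_aesthetic=5.0):
    """Return True if a pair passes every filter; thresholds are illustrative."""
    return (
        pair.language == language
        and min(pair.width, pair.height) >= min_side
        and pair.watermark_prob < max_watermark
        and pair.aesthetic_score >= min_aesthetic
    )


def filter_pairs(pairs, **thresholds):
    """Keep only the pairs that pass all checks in `keep`."""
    return [pair for pair in pairs if keep(pair, **thresholds)]


# Hypothetical candidates: only the first one passes all default filters.
candidates = [
    ImageTextPair("https://example.com/a.jpg", "a red fox in the snow", "en", 2048, 1536, 0.1, 6.2),
    ImageTextPair("https://example.com/b.jpg", "ein Fuchs im Schnee", "de", 2048, 1536, 0.1, 6.2),
    ImageTextPair("https://example.com/c.jpg", "stock photo of a fox", "en", 2048, 1536, 0.9, 6.0),
    ImageTextPair("https://example.com/d.jpg", "fox thumbnail", "en", 256, 256, 0.0, 5.5),
]

for pair in filter_pairs(candidates):
    print(pair.url, "-", pair.caption)
```

Note that none of these checks asks whether the creator of an image consented to its use, which is exactly the gap artists are concerned about.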

A collection of artists to advertise different styles in Stable Diffusion. Source: @arvalis

This fear and anger toward AI-generated images are therefore understandable and justified. However, I also think that creative minds should not project their feelings onto the models and tools, but instead direct them at those who refuse to acknowledge their work and illegally take it to train their models.

What does this mean for NLG?

So what conclusions can we draw from all this?

Artificially generated images and human art both have their benefits and drawbacks. While AI tools can quickly and easily generate a great number of images that are usable almost immediately, their results are only as good as the data and the prompt they are given. Without human input, they can neither create on their own nor decide what to generate next. Human art, on the other hand, is more time-consuming, since humans need (depending on the artist’s skill and experience) a longer period to find an idea or inspiration, prepare a concept and ultimately work on the piece itself. But it is precisely the process, time and thought put into the resulting artwork that make human art so authentic, charming and unique. Aside from that, art is not just a market for artists; it is so much more, which is why it shouldn’t be trampled on by copyright infringement or plagiarism. Artists’ voices of concern shouldn’t go unheard.

Unsettled questions that arise with the growth of AI in society need to be answered, because regulations are urgently needed to protect copyright: who exactly holds the copyright of generated images? Is it the tool that generated them, the developer who programmed the model or the person who trained it? Maybe the user who entered the prompt? Or rather the people whose artworks were used as training data, whether with or without their knowledge?

Regulations for AI-generated images could therefore include, for example, art platforms for professional and hobby artists that cannot be crawled for images. Models should be barred from training on the work of artists who have not consented to its use as training data. Tools, as well as the datasets used to train them, need to be transparent and publicly available and must include safety filters to ensure the technology cannot be misused, e.g. for deepfake porn. Such safety filters not only need to prevent the generation of explicit or violence-glorifying images, but also need to block explicit queries involving, for example, celebrities. Furthermore, labeling AI-generated content should be considered to avoid deception.

Comparable regulations could also be applied to other AI-powered fields such as Natural Language Generation (NLG). Just as text-to-image models turn a text prompt into an image, NLG essentially follows a text-to-text principle: it produces text in response to human input, e.g. a writing prompt. One example of NLG is OpenAI’s ChatGPT, which went viral last November and received a lot of attention from the general public. Its easy-to-use interface fascinated users with the seemingly endless possibilities of text generation: with little effort, they could generate recipes or entire essays from a single prompt. However, just like AI image generation, NLG still has limits that need to be communicated. OpenAI’s documentation states that ChatGPT “may occasionally generate incorrect information” and “may occasionally produce harmful instructions or biased content”. Clarifications like these are a first step toward integrating AI-generated text into public use. Moreover, NLG tools need to reject harmful or highly inappropriate prompts to prevent explicit content from being generated and to avoid abuse. The Intercept, for example, reported on ChatGPT generating intensely racist content.

Authors of all genres may be in a similar uproar to artists facing AI-generated images, so it will inevitably need to be made clear that these tools exist to support human writing, not to replace it. Another problem with artificially generated text produced by tools such as ChatGPT is that the output may contain incorrect information. Similar to deepfakes, this can cause great unrest and spread misinformation. Factual correctness and the avoidance of hallucinations should therefore be particularly important priorities for NLG.

AI in general has become a huge, trending topic and will soon revolutionize the technical and digital world. Despite the many concerns and criticisms the AI community is facing, AI will undeniably make its way into all areas of life. As AI continues to evolve, it is therefore important to consider the ethical implications of using it to generate and share results on social media or to make a profit.

But as our society keeps developing, humans and their need for new innovations and experiences will develop as well. So why not use these innovations to explore the new angles and refreshing perspectives that come with the technology? AI generation tools are about creating a new kind of workflow, one that combines the results of human thinking and machine learning algorithms. Through AI-generated images and texts, we can create something that reflects advances in technology, art and humanity. Regardless of their views on AI technology, everyone should take the time to understand what machines are now capable of. By doing so, they might come to appreciate the creations that emerge from this fascinating collaboration between humans and new technologies.

After all, AI tools are supposed to support those who want to use them and to help people optimize their workflows and make work easier. It’s a technology made to be used by humans, not to replace them. Neither AI-generated images nor NLG are about taking over creative tasks; instead, both technologies are developed to enhance the workflow. Art and natural language belong to the human mind and will continue to exist, with or without AI technology.